
- Environment OS: CentOS 7

- Reference documentation

NVIDIA Container Toolkit 1.16.0 documentation: Installing the NVIDIA Container Toolkit (docs.nvidia.com)

- Detailed installation guide

https://github.com/NVIDIA/k8s-device-plugin/tree/v0.16.0?tab=readme-ov-file


- Package installation

- Register the production repository

```shell
curl -s -L https://nvidia.github.io/libnvidia-container/stable/rpm/nvidia-container-toolkit.repo | \
  sudo tee /etc/yum.repos.d/nvidia-container-toolkit.repo
```

- Install the NVIDIA Container Toolkit

```shell
sudo yum install -y nvidia-container-toolkit
```

- Containerd configuration (/etc/containerd/config.toml)

- Default config.toml

```toml
[plugins."io.containerd.grpc.v1.cri".containerd]
  default_runtime_name = "runc"
  disable_snapshot_annotations = true
  discard_unpacked_layers = false
  ignore_rdt_not_enabled_errors = false
  no_pivot = false
  snapshotter = "overlayfs"

  [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]
    base_runtime_spec = ""
    cni_conf_dir = ""
    cni_max_conf_num = 0
    container_annotations = []
    pod_annotations = []
    privileged_without_host_devices = false
    runtime_engine = ""
    runtime_path = ""
    runtime_root = ""
    runtime_type = ""

    [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

    [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
      base_runtime_spec = ""
      cni_conf_dir = ""
      cni_max_conf_num = 0
      container_annotations = []
      pod_annotations = []
      privileged_without_host_devices = false
      runtime_engine = ""
      runtime_path = ""
      runtime_root = ""
      runtime_type = "io.containerd.runc.v2"
```

- Modified config.toml (set `default_runtime_name` to `nvidia` and register an `nvidia` runtime that calls `/usr/bin/nvidia-container-runtime`)

```toml
[plugins."io.containerd.grpc.v1.cri".containerd]
  default_runtime_name = "nvidia"
  #default_runtime_name = "runc"
  disable_snapshot_annotations = true
  discard_unpacked_layers = false
  ignore_rdt_not_enabled_errors = false
  no_pivot = false
  snapshotter = "overlayfs"

  [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]
    base_runtime_spec = ""
    cni_conf_dir = ""
    cni_max_conf_num = 0
    container_annotations = []
    pod_annotations = []
    privileged_without_host_devices = false
    runtime_engine = ""
    runtime_path = ""
    runtime_root = ""
    runtime_type = ""

    [plugins."io.containerd.grpc.v1.cri".containerd.default_runtime.options]

  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

    [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.nvidia]
      privileged_without_host_devices = false
      runtime_engine = ""
      runtime_root = ""
      runtime_type = "io.containerd.runc.v2"

      [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.nvidia.options]
        BinaryName = "/usr/bin/nvidia-container-runtime"

    [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
      base_runtime_spec = ""
      cni_conf_dir = ""
      cni_max_conf_num = 0
      container_annotations = []
      pod_annotations = []
      privileged_without_host_devices = false
      runtime_engine = ""
      runtime_path = ""
      runtime_root = ""
      runtime_type = "io.containerd.runc.v2"
```
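The essential part of the change is that `default_runtime_name` must name a runtime that is actually declared under `containerd.runtimes` (here `nvidia`). The following is a minimal sketch of a pre-restart sanity check, assuming the v1 CRI config layout shown above. `check_default_runtime` is a hypothetical helper, not part of containerd or the NVIDIA toolkit, and its grep/sed matching is a heuristic rather than a real TOML parser.

```shell
# Hypothetical helper: verify that default_runtime_name points at a declared runtime.
# Assumes the [plugins."io.containerd.grpc.v1.cri".containerd...] layout shown above.
check_default_runtime() {
  local cfg="$1"
  local name
  # First uncommented default_runtime_name line; keep only the quoted value.
  name=$(grep -E '^[[:space:]]*default_runtime_name[[:space:]]*=' "$cfg" \
    | head -n 1 | sed -E 's/.*=[[:space:]]*"([^"]*)".*/\1/')
  if [ -z "$name" ]; then
    echo "default_runtime_name not found in $cfg" >&2
    return 1
  fi
  # The runtime must have its own ...containerd.runtimes.<name>] table.
  if grep -qF "containerd.runtimes.${name}]" "$cfg"; then
    echo "$name"
  else
    echo "runtime '${name}' is not defined under containerd.runtimes" >&2
    return 1
  fi
}
```

Usage: `check_default_runtime /etc/containerd/config.toml && sudo systemctl restart containerd`, so containerd is only restarted when the default runtime resolves.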

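- Restart containerd so the modified config.toml takes effect (`sudo systemctl restart containerd`), then install the NVIDIA device plugin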

```shell
kubectl create -f https://raw.githubusercontent.com/NVIDIA/k8s-device-plugin/v0.14.3/nvidia-device-plugin.yml
```

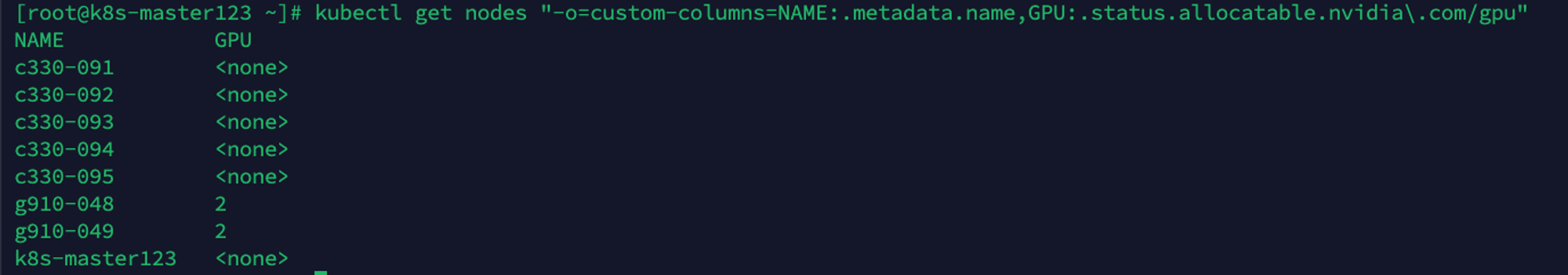

- Check the allocatable GPUs on each node

```shell
kubectl get nodes "-o=custom-columns=NAME:.metadata.name,GPU:.status.allocatable.nvidia\.com/gpu"
```
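The custom-columns query prints one row per node, with `<none>` for nodes that do not advertise `nvidia.com/gpu`. As a small sketch for clusters with many nodes, a hypothetical helper `sum_allocatable_gpus` can total that column; it assumes the two-column NAME/GPU output of the command above.

```shell
# Hypothetical helper: total the GPU column from the custom-columns output above.
sum_allocatable_gpus() {
  # Skip the NAME/GPU header row; "<none>" (no GPU resource) is not numeric and is ignored.
  awk 'NR > 1 && $2 ~ /^[0-9]+$/ { total += $2 } END { print total + 0 }'
}

# On a live cluster:
# kubectl get nodes "-o=custom-columns=NAME:.metadata.name,GPU:.status.allocatable.nvidia\.com/gpu" | sum_allocatable_gpus
```

A result of 0 usually means the device plugin has not registered any GPU yet.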

- GPU allocation test (a Pod that requests one GPU; create it with `kubectl apply -f`)

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: gpu-pod
spec:
  restartPolicy: Never
  containers:
    - name: cuda-container
      image: nvcr.io/nvidia/k8s/cuda-sample:vectoradd-cuda10.2
      command:
        - sleep
      args:
        - '1000'
      resources:
        limits:
          nvidia.com/gpu: '1'
```
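GPUs are requested through `resources.limits` as the extended resource `nvidia.com/gpu`; Kubernetes only accepts whole numbers for extended resources, and if `requests` is also set it must equal `limits`. The sketch below wraps the test Pod above in a hypothetical `gpu_pod_manifest` function so the Pod name and GPU count can be varied; the name and parameters are illustrative assumptions.

```shell
# Hypothetical helper: print the test Pod manifest with a configurable name and GPU count.
gpu_pod_manifest() {
  local name="${1:-gpu-pod}"   # Pod name (defaults to the one used above)
  local count="${2:-1}"        # whole number of GPUs to request
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  restartPolicy: Never
  containers:
    - name: cuda-container
      image: nvcr.io/nvidia/k8s/cuda-sample:vectoradd-cuda10.2
      command:
        - sleep
      args:
        - '1000'
      resources:
        limits:
          nvidia.com/gpu: '${count}'
EOF
}

# On a live cluster:
# gpu_pod_manifest gpu-pod 1 | kubectl apply -f -
```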

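- Verify GPU allocation (the `nvidia.com/gpu` row under `Allocated resources` should show the GPU requested by the Pod)

```shell
kubectl describe nodes <gpu-node-name>
```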
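The describe output is long, so a filter helps. The following minimal sketch defines a hypothetical `allocated_gpus` helper that prints the Requests and Limits columns of the `nvidia.com/gpu` row inside the `Allocated resources:` section. It assumes the usual `kubectl describe node` layout, in which sections start at column 0 and their rows are indented; other kubectl versions may format this differently.

```shell
# Hypothetical helper: extract "requests limits" for nvidia.com/gpu from `kubectl describe node`.
allocated_gpus() {
  awk '
    /^Allocated resources:/ { in_alloc = 1; next }   # enter the section
    in_alloc && /^[^[:space:]]/ { in_alloc = 0 }     # next top-level section ends it
    in_alloc && $1 == "nvidia.com/gpu" { print $2, $3 }
  '
}

# On a live cluster:
# kubectl describe nodes <gpu-node-name> | allocated_gpus
```

The Capacity and Allocatable sections also list `nvidia.com/gpu:` (with a colon), which is why the filter only looks inside `Allocated resources`.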
